Intro

Yes, it’s another OpenAI blog post (although it’s my first one, so I’m definitely late to the party).

In this post, we’ll explore how to utilise an OpenAI endpoint from a Jupyter workspace within Domo. Our objective is to translate a column of a dataset from English into multiple languages, and then give users the option to switch the language of the content they see via a filter at the dashboard level.

We’ll be using the following components to do this:

- Domo Stories Layouts

- Domo Variables

- Domo Dataset Views

- Domo Jupyter Workspaces

- OpenAI

To give you an idea, here’s what the end result will look like: a filter at the top of the page selects a language, which changes the language of the departments in the bar chart series labels and in the data table. You can try it out below…

Process & Prerequisites

The general flow will be to take our dataset, which contains a column of departments in English, and load it into a Jupyter workspace. From there, we will extract the unique list of departments, pass them to OpenAI, and receive translations in three languages: German, French, and Spanish. We will then use Dataset Views to join the newly translated columns back onto our original dataset before creating the dashboard.
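As a rough sketch of that flow, the snippet below de-duplicates a list of departments and builds one row per department with a column per language. The `translate` callable is a stand-in for the OpenAI call, and the function name, the `department_<code>` column names and the sample departments are illustrative assumptions rather than anything taken from the notebook.

```python
from typing import Callable, Dict, Iterable, List

# Language code -> language name; the codes are reused as column suffixes.
LANGUAGES = {"de": "German", "fr": "French", "es": "Spanish"}


def build_translation_table(
    departments: Iterable[str],
    translate: Callable[[str, str], str],
) -> List[Dict[str, str]]:
    """Return one row per unique department with a column per language."""
    # dict.fromkeys removes duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(departments))
    table = []
    for name in unique:
        row = {"department": name}
        for code, language in LANGUAGES.items():
            row[f"department_{code}"] = translate(name, language)
        table.append(row)
    return table


def fake_translate(text: str, language: str) -> str:
    # Placeholder so the sketch runs without an API key.
    return f"{text} ({language})"


rows = build_translation_table(["Sales", "Finance", "Sales"], fake_translate)
```

Translating only the unique values keeps the number of API requests (and the cost) down, since a department that appears on thousands of rows is still only translated once.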

In the dashboard, we’ll utilise variables to allow the user to select a language, which will replace the relevant department column with the chosen language.

Before we get started, you will need an account with OpenAI. This is free and comes with some credits to use against their API. This example cost me less than 10 cents of the $18.00 free developer credits I have with my account.

Once you’re set up, grab your API key from this page.

Starting Dataset

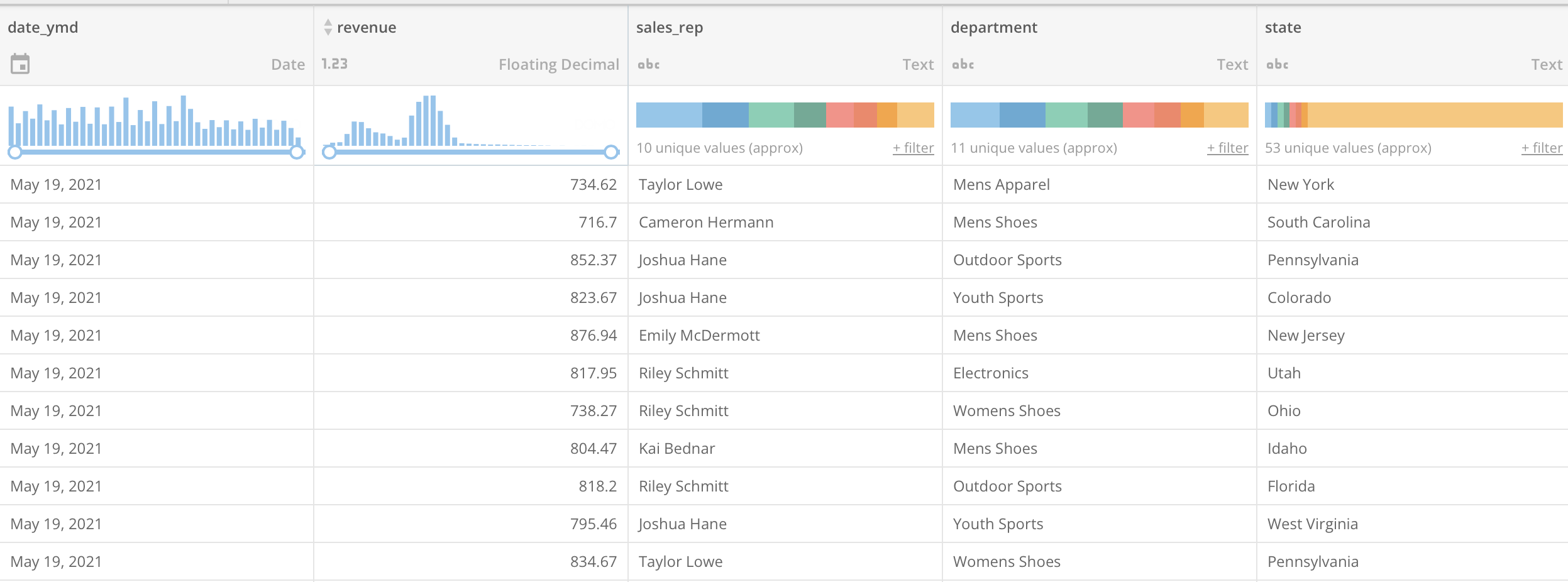

Here’s a view of our starting dataset with the department column we’ll be translating…

Jupyter

I’ll create a new Jupyter workspace in Domo with the kernel set to Python. I’ve added my dataset as an input and specified an output to contain our translated departments… (My standard disclaimer applies here: I’m not a data scientist, so it’s very possible there’s a better way to write this code.)

If you’d like to just clone the whole workbook into your Jupyter workspace, you can do so from my GitHub here.
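For a feel of what the translation step might look like, here is a minimal sketch using the current `openai` Python client. It is not the notebook from the GitHub repo: the prompt wording, the model name `gpt-4o-mini`, the `OPENAI_API_KEY` environment variable and the helper names are all assumptions, and reading from and writing to the Domo datasets is left out.

```python
import json
import os


def build_prompt(departments, languages):
    """Ask for every department in every language in a single request."""
    return (
        "Translate each department name below from English into "
        + ", ".join(languages)
        + ". Reply with a JSON object keyed by the English name, where each "
        "value is an object keyed by language name.\n"
        + "\n".join(departments)
    )


def parse_translations(raw, departments, languages):
    """Turn the JSON reply into one row per department.

    Raises KeyError if a department or language is missing from the reply.
    """
    data = json.loads(raw)
    rows = []
    for name in departments:
        row = {"department": name}
        for language in languages:
            row[language] = data[name][language]
        rows.append(row)
    return rows


def translate_departments(departments, languages, model="gpt-4o-mini"):
    # Imported here so the helpers above work without the package installed.
    from openai import OpenAI

    # Assumes the key is stored in an environment variable, not in the notebook.
    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.chat.completions.create(
        model=model,  # assumed model name; swap in whichever you have access to
        messages=[{"role": "user", "content": build_prompt(departments, languages)}],
        temperature=0,
        response_format={"type": "json_object"},
    )
    return parse_translations(
        response.choices[0].message.content, departments, languages
    )
```

Calling `translate_departments(["Sales", "Finance"], ["German", "French", "Spanish"])` would return a list of rows that can be turned into a DataFrame and written to the output dataset. Sending all departments in one request is a design choice for a short list; a long list would need batching.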

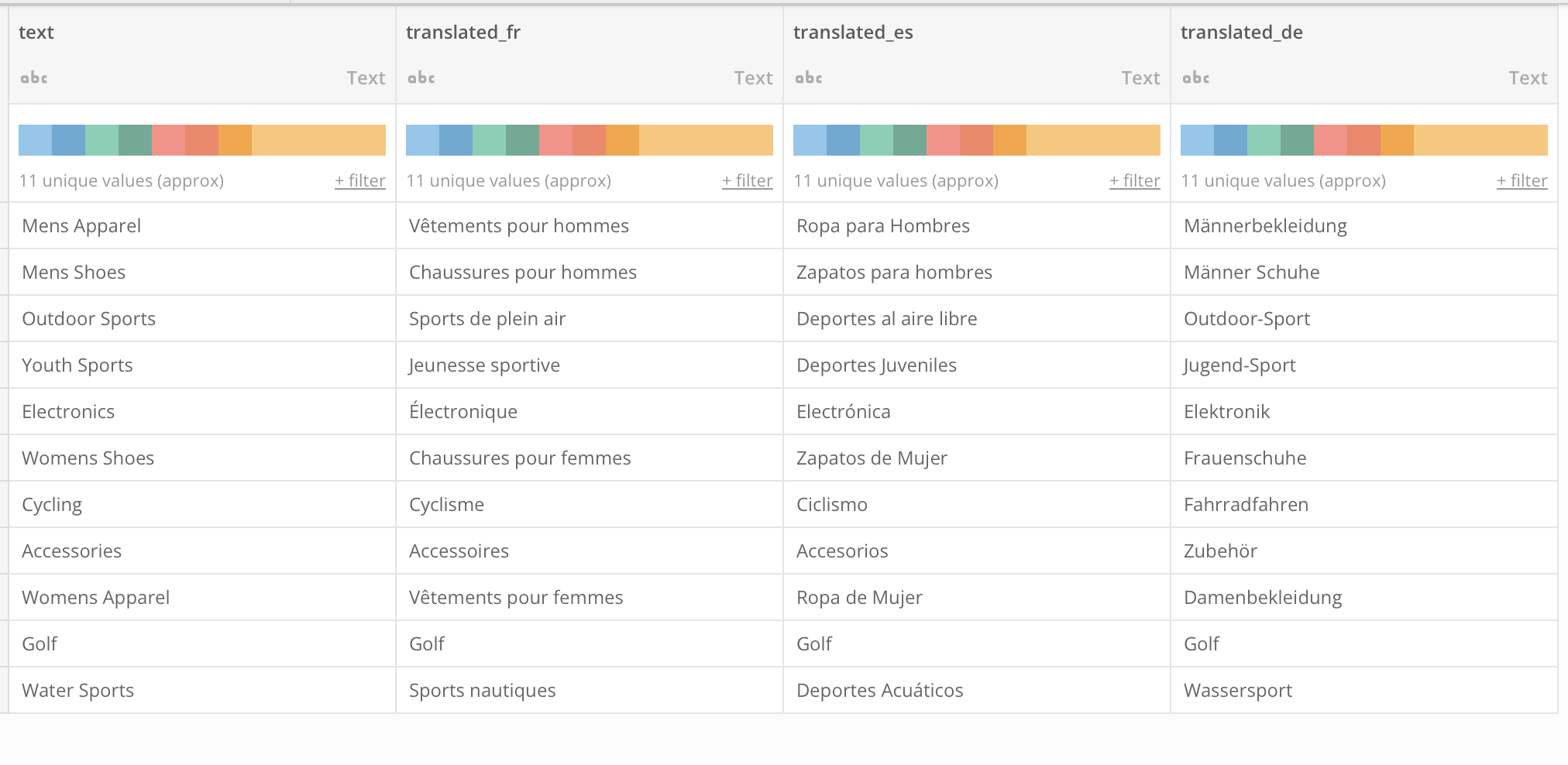

After the notebook runs, I have an output dataset that looks like the dataset below, with one column per language for each department. We’re now ready to join this back onto our original dataset. At this point you could do this in Jupyter, in Magic ETL, or in a Dataset View; it’s really up to you and what your own use case dictates.

To keep it simple, I’m going to join using a Dataset View, where I can rename my new columns with a suffix of the language code, so I have department, department_es, department_de, etc.
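If you would rather do the join in Jupyter, the logic is a left join on the department column. The sketch below uses plain Python so it runs anywhere (in the notebook a pandas merge would do the same job); the `headcount` column and the sample rows are made up for illustration.

```python
def join_translations(rows, translations, key="department"):
    """Left join: every original row is kept, translated columns are added when found."""
    lookup = {t[key]: t for t in translations}
    joined = []
    for row in rows:
        merged = dict(row)
        extra = lookup.get(row[key], {})
        # Copy across every translated column except the join key itself.
        merged.update({k: v for k, v in extra.items() if k != key})
        joined.append(merged)
    return joined


original = [
    {"department": "Sales", "headcount": 12},
    {"department": "Legal", "headcount": 3},
]
translations = [
    {
        "department": "Sales",
        "department_de": "Vertrieb",
        "department_fr": "Ventes",
        "department_es": "Ventas",
    },
]

joined = join_translations(original, translations)
```

A left join means a department with no translation yet still shows up in the dashboard; it just lacks the translated columns until the notebook is re-run.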

Visualisation

Next we’ll build out some charts and a variable.

Create a new chart. I’m using a bar chart where the series is the department name, which the variable can change.

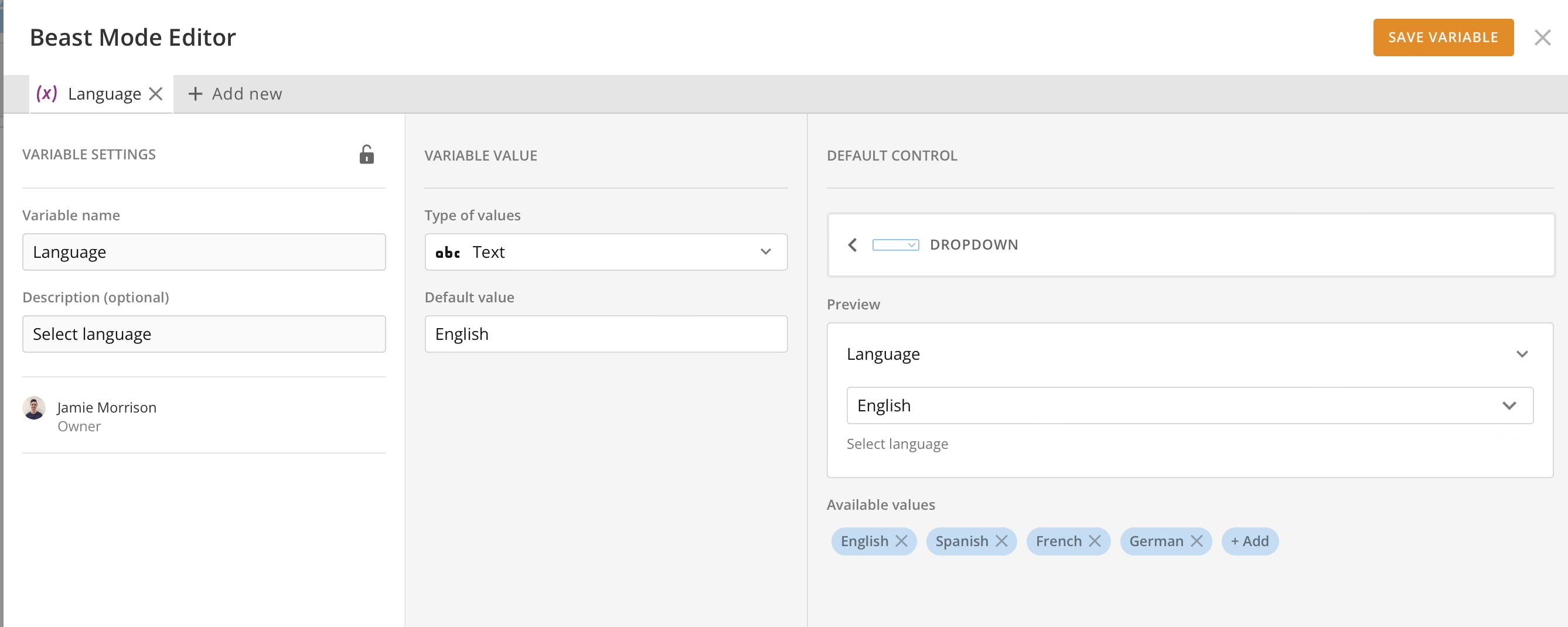

I’ll create a new variable:

Then I’ll create a Beast Mode to handle the switch with the following code, where the ‘Language’ column is the variable we just created.
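As a rough Python illustration of the switching logic (not the Beast Mode formula itself, which is written in Domo’s SQL-like syntax), the idea is to map the selected variable value to the matching department column and fall back to English otherwise. The variable values used here, such as ‘German’, are assumptions.

```python
# Assumed variable values mapped to the column suffixes from the Dataset View.
LANGUAGE_COLUMNS = {
    "English": "department",
    "German": "department_de",
    "French": "department_fr",
    "Spanish": "department_es",
}


def department_label(row, language):
    """Return the department name in the selected language, defaulting to English."""
    column = LANGUAGE_COLUMNS.get(language, "department")
    return row[column]


example_row = {
    "department": "Sales",
    "department_de": "Vertrieb",
    "department_fr": "Ventes",
    "department_es": "Ventas",
}
label = department_label(example_row, "Spanish")
```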

Finally, we can add the variable control to the page, and we’re done!

Closing Thoughts

This is just one example of an OpenAI integration for language translation, and it was surprisingly quick and easy to set up and integrate with Domo. OpenAI provides a range of sample and boilerplate code that you can lift and drop into your own solutions and workflows to quickly get going with some testing and exploration of the technology. I think there are likely to be many more uses for this in the data and analytics space, and we’ll see them cropping up as the technology continues to develop. We’re already seeing integrations with NLP for data analysis, as well as code generation and AI as a pair programmer (see GitHub Copilot) for app development.